Ijraset Journal For Research in Applied Science and Engineering Technology

- Home / Ijraset

- On This Page

- Abstract

- Introduction

- Conclusion

- References

- Copyright

A Multidisciplinary Threats to Emerging Cybersecurity, Legal and Ethical Threats Posed by Deepfake Technology

Authors: Kaif Qureshi, Akshat Verma, Harsh Vachheta, Anant Singh, Zaid Khan Pathan

DOI Link: https://doi.org/10.22214/ijraset.2024.64202

Certificate: View Certificate

Abstract

The creation of remarkably realistic but phony audio and video content through the use of deepfake technology raises a number of issues with cybersecurity, law and ethics. Deep fakes undermine the credibility of online information sources by posing risks including identity theft and the dissemination of false information. Legally speaking, the rise of deepfakes highlights regulatory inadequacies and enforcement concerns while posing difficult considerations about privacy rights and responsibility. Due to the potential for reputational injury and the perpetuation of negative stereotypes, deep fakes raise ethical questions about permission, privacy and harm to society. Deep fakes have become more common as a result of artificial intelligence\'s quick development which calls for preventative steps to counter new dangers. To effectively secure against misuse and maintain the integrity of digital material, this research paper proposes for a multidisciplinary strategy that takes legal, ethical and cybersecurity concerns into account.

Introduction

I. INTRODUCTION

Deepfakes are a type of synthetic media in which people are artificially intelligently manipulated or replaced inside pre-existing photographs or movies. Producing modifications or fabrications that are almost identical to real content is the main objective of deepfakes. This advanced technology combines and manipulates visual and aural components using deep learning algorithms, generative adversarial networks (GANs). The outcome is multimedia that is both incredibly persuasive and frequently misleading. Effective detection and verification procedures are urgently needed in light of the growing concerns around disinformation and privacy arising from the growth of deepfake technology.

II. BACKGROUND

A. How DeepFakes works

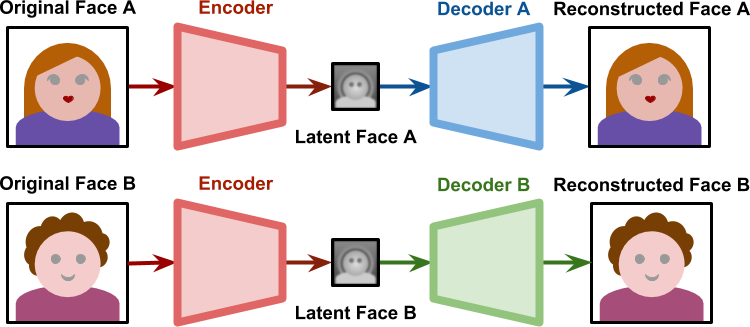

Deepfake technology is the term for content creation that is incredibly realistic yet completely faked using artificial intelligence and machine learning. This system uses a variational auto-encoder (VAE), a deep learning network that compresses and encodes input data into a lower-dimensional latent space, including face traits. Furthermore, by improving the quality of created material, Generative Adversarial Networks (GANs) are essential in making deepfakes more realistic. The steps involved in producing a deepfake image are gathering data, training the model, generating a latent representation and switching out faces. However, because deepfake technology may be abused to distribute false information and abuse images, it presents serious ethical questions.

III. EVOLUTION OF DEEPFAKE

The genesis of deepfakes may be found in scholarly investigations on artificial intelligence and image processing carried out during the 1990s. Deepfakes did not, however, become well-known until the middle of the 2010s. An important turning point was the development of neural networks and the introduction of Generative Adversarial Networks (GANs) in 2014. These GANs were developed by Ian Goodfellow and others and deepfake technology was built upon them. This made manipulations increasingly more complex and realistic possible.

IV. POSSIBLE USES AND IMPLICATIONS

A. Entertainment

Deepfake technology has transformative potential in the entertainment industry by creating immersive and innovative experiences. Imagine movies that feature historically significant figures brought to life with remarkable accuracy, allowing audiences to engage with history in a visually compelling way. For instance, deepfake technology could recreate the likeness of a historical figure like Albert Einstein for a biopic, enhancing educational and entertainment value. Additionally, personalized advertisements featuring celebrities can create more engaging and targeted marketing campaigns. A brand might use deepfake technology to have a favorite celebrity endorse their product, tailored to individual viewers' preferences. Internationally, this technology can bridge cultural gaps by allowing global audiences to experience content in their native languages or with familiar faces, making media more relatable and engaging. However, it is crucial to address the ethical implications of using such technology to ensure that it respects the rights and dignity of individuals.

B. Education

In education, deepfake technology offers groundbreaking possibilities for creating realistic simulations and enhancing learning experiences. For language learning, deepfakes can provide immersive conversational practice with virtual native speakers, helping students improve their pronunciation and comprehension in a more interactive way. Historical reenactments can be brought to life, allowing students to witness and engage with historical events in a dynamic and visually stimulating manner. For medical training, deepfakes can simulate complex surgical procedures or patient interactions, providing students with valuable hands-on experience without the risks associated with real-life practice. Internationally, these applications can offer standardized, high-quality educational resources to diverse learners, overcoming geographical and economic barriers. However, it is important to maintain educational integrity and ensure that deepfake content is accurate and used responsibly to support genuine learning outcomes.

C. Accessibility

Deepfake technology holds significant promise for improving accessibility by bridging communication gaps for individuals with disabilities. For example, deepfakes can translate spoken content into sign language, creating real-time, visually accessible communication for the deaf and hard of hearing community. Additionally, deepfakes can be used to describe visuals for the blind or visually impaired, enhancing their ability to understand and interact with visual content. Internationally, these applications can help create more inclusive environments by making information and experiences accessible to a broader audience. For instance, a deepfake-powered tool could narrate and describe images in educational materials or public service announcements, ensuring that visually impaired individuals have equal access to essential information. By focusing on accessibility, deepfake technology can contribute to a more equitable society, though careful attention must be given to ensuring that these tools are accurate and sensitive to user's needs.

D. Privacy

Deepfake technology can play a crucial role in enhancing privacy and security, particularly in protecting vulnerable individuals. For example, deepfakes can be used to anonymize whistleblowers or witnesses, ensuring their identities remain confidential while still allowing them to provide valuable testimony or information. This application can be especially important in high-profile legal cases or investigations where personal safety is a concern. Internationally, this technology can help protect individuals in politically unstable regions or those facing threats due to their involvement in sensitive issues. However, the use of deepfake technology for privacy protection must be handled with caution to prevent misuse and ensure that ethical standards are upheld. It's essential to implement robust safeguards to prevent the technology from being exploited for fraudulent or harmful purposes, while still leveraging its potential to enhance personal security and confidentiality.

V. IMPACT

A. Misinformation and Disinformation

Deepfakes blur the line between reality and fabrication, making it possible to create convincing yet false narratives. They can be used to manipulate public opinion by portraying politicians, celebrities or ordinary people saying or doing things they never actually did. This capability threatens to undermine trust in media, disrupt democratic processes and harm social cohesion. The spread of misleading content can have profound consequences for journalism and public trust making it crucial to develop countermeasures to address this challenge.

B. Privacy Erosion

Deepfakes present significant risks to personal privacy by superimposing faces onto explicit or compromising content. This can result in reputational damage, emotional distress and extortion.

The ability to manipulate visual evidence undermines trust in both legal systems and personal relationships highlighting the need for enhanced protections and safeguards against misuse.

C. Security Vulnerabilities

Industries that rely on facial recognition, such as banking and border control, face new security challenges due to deepfakes. These technologies can potentially bypass authentication measures, posing risks to both national security and individual safety. As deepfakes become more sophisticated, it is crucial to strengthen security protocols to prevent exploitation.

VI. EXAMPLES OF DEEPFAKE

- Star Wars: Luke Skywalker Deepfake: Fans initially celebrated the appearance of a youthful Luke Skywalker in The Mandalorian but a deepfake by YouTuber Shamook showcased even more impressive possibilities. By using the visage of a younger Mark Hamill, Shamook's work highlighted the advanced capabilities of deepfake technology in recreating beloved characters with remarkable authenticity pushing the boundaries of visual effects and fan engagement.

- Deepfake Misuse? Slowed Down Pelosi Speech: While not a deepfake, an altered video of House Speaker Nancy Pelosi was slowed down to make her appear to slur her words. This manipulation demonstrated how easily reality can be distorted, showcasing the potential misuse of video editing technology to mislead and deceive.

- Funny Deepfake: Peele Portraying Obama: BuzzFeed created a humorous public service announcement by deepfaking actor Jordan Peele as Barack Obama. The video cleverly edited Peele's face onto Obama's body using deepfake technology to deliver a comedic yet impactful message about the potential for misuse of such technology.

- US Deepfake Example: Fake Zuckerberg Video: A deepfake video of Facebook CEO Mark Zuckerberg was created by artist and activist David Leavitt. The video depicted Zuckerberg making unsettling claims about data privacy, using deepfake technology to make a statement on issues surrounding privacy and social media thus illustrating how deepfakes can be used to provoke thought or critique societal issues.

VII. CHALLENGES IN DETECTING ADVANCED DEEPFAKES

- Deepfake Advancement: The techniques used to create plausible fakes are becoming more advanced along with deepfake innovation. As generative models such as Generative Adversarial Networks (GANs) get more sophisticated, it becomes increasingly challenging to distinguish between real and fraudulent information.

- Increased Authenticity and Quality: The creators of deepfakes always strive to improve the level of authenticity and quality of their creations. Traditional discovery techniques struggle against deepfakes, which now include realistic facial emotions, lip synchronization, and flawless foundation incorporation.

- Low-Quality Source Fabric: Grainy or pixelated movies are examples of low-quality source fabric that can be used to create deepfakes. Conventional approaches could struggle to discern between artifacts created by deepfakes and those displayed by poor-quality footage.

- Variety of Deepfakes: Deepfakes may take many different forms, such as voice cloning, face swapping, and full-body transformations. It is difficult to develop a single location approach because deepfake algorithms have different characteristics.

- Limited Information Preparation: A sizable dataset including both real and restricted substances is needed to prepare deepfake discovery models. It is challenging to compile a comprehensive dataset that addresses the vast range of possible deepfakes.

- Instant Sharing: Deepfakes can be rapidly created and disseminated across the internet making their impact both widespread and immediate. The ease with which deepfake content can be produced and shared amplifies its potential to mislead, manipulate or damage reputations. To mitigate the effects of deepfakes, real-time detection and response become critical. The speed of deepfake creation and distribution necessitates advanced technologies and strategies for quick identification and intervention. Without prompt action, the rapid spread of deepfakes can lead to significant harm, reinforcing the need for effective monitoring and countermeasures in the digital landscape.

These inconsistencies may take the form of unnatural blinking patterns, slight misalignments in facial features during movement or speech patterns that differ from the source speaker. As deepfake technology continues to advance, so too do the methods of detection, creating an ongoing arms race between those who create these manipulated media pieces and those who seek to defend against them. The ultimate goal is to develop real-time detection capabilities that can identify and flag deepfakes before they are widely disseminated, thereby safeguarding against the spread of misinformation and manipulation.

VIII. FEASIBILITY OF DEEPFAKE DETECTION

It could still be able to identify poorly produced deepfakes with the unaided eye as of right now. Dead giveaways that are normally simple to identify include the absence of human characteristics, such as blinking and elements that could be inaccurate, including incorrectly oriented shadows. However, as GAN processes evolve and technology advances, it will soon be difficult to distinguish between legitimate and fake videos. With time, the first GAN component—the one that produces forgeries—will get better. To continually train the AI so that it grows better and better is what machine learning (ML) is about. It will eventually surpass our ability to distinguish between the true and the phony. In fact, experts predict that fully authentic digitally altered recordings will be available in as little as six months to a year. For this reason, efforts to develop AI-based defenses against deepfakes are still being made. However, as technology is always developing, these defenses must also. Recently, a group of corporations including Microsoft and Facebook, as well as several prestigious American colleges, came together to launch the Deepfake Detection Challenge (DFDC). The goal of this project is to spur scientists to create tools that can identify when artificial intelligence has been used to tamper with footage.

IX. DEEPFAKES: EVOLVING CYBERSECURITY THREAT

Realistic AI-generated movies and sounds, or "deepfakes" present a significant danger of criminality. They may be used to trick individuals “social engineering" and steal identities "character burglary". Imagine receiving a fraudulent financial order through a deepfake CEO or a phony video call from a loved one seeking money.

Differentiating between the genuine and the fake is getting harder as deepfakes get more realistic. This poses a particular risk to enterprises. Even if discovered later, a deepfake of a corporate official might harm their reputation. Phishing attempts can also utilize deepfakes to fool employees into divulging private information or money. To counter this threat, businesses must modernize their security systems, fusing technology with personnel education and verification procedures. Cybersecurity in today's digital environment has to incorporate safeguards against deepfakes' ability to deceive.

X. STRATEGIES FOR SAFEGUARDING AND COUNTERING DEEPFAKES:

A. Developing a Countermeasure for Deepfakes

- AI: A Two-Sided Blade: Although deepfakes are dangerous, there is a way to counter them using technology. AI not only produces deepfakes but also advances advanced detecting techniques. Inconsistencies in audio and video that are undetectable to humans, such as abnormalities in face movement can be recognized by machine learning algorithms. A digital fortress resistant to manipulation is being built by this continuous evolution.

- Encouraging the People: Power comes from Knowledge: Education is essential. Beyond awareness, we must cultivate media literacy. People may learn how to assess content critically through workshops and online courses. Individual empowerment helps us create a society that is more resilient. Promoting critical thinking is a responsibility shared by individuals, tech firms and media groups.

- Establishing Trust: Securing the Truth: Reliable techniques for content verification are crucial. Content integrity may be guaranteed by methods such as blockchain and digital watermarking. Watermarks validate authenticity and source information by acting as invisible stamps. Blockchain generates an impenetrable trail of a file's creation and modifications. By using these techniques, the battle against deepfakes is strengthened.

- The Legal Shield: Determining Limits: The struggle even reaches the legal sphere. Governments must enact legislation to safeguard privacy, prohibit malevolent deepfakes, and establish moral guidelines for the application of AI. The global character of the digital world necessitates that these frameworks be flexible and collaborative across national boundaries. A worldwide agreement is necessary to successfully combat deepfakes.

XI. INITIATIVES BY GOVERNMENTS, ORGANIZATIONS AND TECH COMPANIES

- Legislation and Regulation: Governments worldwide are actively developing laws to address the challenges posed by deepfakes. In California, Assembly Bill 730 requires manipulated media to be clearly labeled aiming to prevent misinformation and protect privacy. Similarly, the European Union's Digital Services Act is working on regulations that mandate transparency for AI-generated content including deepfakes. These legislative efforts focus on safeguarding personal privacy and intellectual property while combating malicious uses. Effective regulation will require ongoing collaboration between lawmakers, technology companies and legal experts to ensure that laws remain relevant and adaptable to technological advancements.

- Research and Development: Significant efforts are underway in research and development to combat deepfakes. The Deepfake Detection Challenge, organized by Facebook, encourages innovation in creating algorithms that can accurately identify manipulated media. Research institutions like MIT and Stanford are also developing advanced tools and techniques for deepfake detection. These initiatives are crucial for staying ahead of the evolving technology used in deepfakes. By advancing detection methods and creating new technologies, researchers aim to provide robust solutions to counteract the potential threats posed by deepfakes.

- Education and Awareness: Raising public awareness about deepfakes is essential for mitigating their impact. Campaigns by organizations such as the Cybersecurity and Infrastructure Security Agency (CISA) focus on educating individuals about the existence and risks of deepfakes. Technology companies like Google also offer resources to help users identify manipulated content. For example, Google’s Fact Check Explorer helps verify the authenticity of information. These educational efforts are vital for equipping people with the knowledge to recognize and question deepfakes thereby reducing their spread and influence.

- Partnerships and Coalitions: Tackling deepfake challenges requires collaborative efforts across industries. Initiatives like the Global Deepfake Alliance unite technology companies, academic researchers and policymakers to address the issue. This coalition fosters cooperation to develop effective detection technologies and regulatory frameworks. By sharing expertise and resources, these partnerships aim to enhance deepfake countermeasures and address the complexities of the technology. Cross-sector collaboration is essential for creating comprehensive strategies and ensuring that responses to deepfake threats are both effective and adaptable to new developments.

XII. FUTURE SCOPE

A. Better Detection Tools

Investing in research to advance deepfake detection algorithms is crucial. Developing real-time tools that can identify manipulated content across various platforms helps in quickly addressing deepfake threats. Enhanced detection technologies will enable more effective monitoring and verification of media ensuring that manipulated content is identified and managed promptly to mitigate its potential impact.

B. Standardization and Certification

Establishing industry standards for deepfake detection and authentication is essential for consistency and reliability. Certification programs can help verify the authenticity of media content providing a clear framework for identifying genuine versus manipulated content. These standards and certifications ensure that detection methods are robust and universally accepted.

C. Ethical Standards for Content Development

Encouraging content creators to adhere to ethical guidelines when using deepfake technology promotes responsible use. Transparency in content creation and clear disclosure of manipulated media help prevent misuse and build trust with audiences. Responsible practices ensure that deepfake technology is used ethically and does not contribute to misinformation or harm.

D. Collaboration with Social Media Platforms

Social media companies play a key role in combating deepfakes on their platforms. They should implement robust reporting mechanisms that allow users to flag suspicious content. Active collaboration between social media platforms and other stakeholders is necessary to address the spread of deepfakes and ensure that effective countermeasures are in place.

E. International Cooperation

Addressing the global challenge of deepfakes requires international cooperation. Sharing best practices, research findings and regulatory approaches across borders enhances the ability to tackle deepfake threats effectively. Global collaboration ensures a unified response and leverages diverse expertise to develop comprehensive strategies for managing and mitigating deepfake issues worldwide.

XIII. LEGAL FRAMEWORKS AND REGULATORY CHALLENGES

- Privacy and Consent: Deepfakes often manipulate someone’s likeness without their consent raising significant privacy issues. Current laws need to address how personal data is used particularly concerning the creation and dissemination of deepfake content. Regulations must ensure that individuals have control over their likeness and protect against unauthorized use. Privacy laws should be updated to cover deepfakes and safeguard personal data from exploitation in digital media.

- Intellectual Property (IP) Rights: Deepfakes can infringe on intellectual property rights, including copyrights and trademarks, by using someone else’s work without permission. This creates confusion between original and manipulated content. Legal frameworks must evolve to protect creators’ rights and address the unauthorized use of their work in deepfakes. Adapting IP laws to the digital age is crucial to ensure creators' intellectual property is respected and protected.

- Defamation and Reputation Damage: Deepfakes can damage an individual’s reputation by portraying them in false or harmful scenarios. Current defamation laws may need revisions to address this new form of digital deception. Legal frameworks should be adapted to consider the unique challenges posed by deepfakes, ensuring individuals have recourse if their image is manipulated to harm their reputation. Effective legal protections are essential for addressing deepfake-related defamation.

- Authentication and Trust: As deepfakes become increasingly convincing, verifying the authenticity of media becomes more challenging. Regulations should promote transparency and establish mechanisms for verifying content sources. Encouraging the development of tools and standards for media authentication can help maintain trust in digital content. Ensuring that viewers can distinguish between genuine and manipulated material is crucial for preserving the integrity of information.

- Disclosure Requirements: Some jurisdictions may mandate clear labeling or disclaimers for deepfake content to prevent misinformation. These rules aim to ensure viewers are aware they are engaging with manipulated material. Disclosure requirements help mitigate the risk of deception and provide transparency about the nature of the content. Implementing such regulations can enhance public awareness and reduce the potential for deepfake misuse.

- Criminal Misuse: Deepfakes can be weaponized for criminal activities like identity theft, fraud, or political manipulation. Legal frameworks must address these risks and impose appropriate penalties for malicious use. Developing specific laws to tackle criminal deepfake activities is essential for preventing abuse and ensuring accountability. Effective legal measures are needed to deter and penalize those who exploit deepfake technology for illicit purposes.

- Cross-Border Challenges: Deepfakes can easily cross national borders, complicating efforts to regulate and control them. Harmonizing regulations globally is crucial to effectively combat misuse. International cooperation and standardized legal frameworks can help address the global nature of deepfake threats. By aligning regulatory approaches, countries can enhance their ability to manage and mitigate the risks associated with deepfakes on a worldwide scale.

XIV. INDIA'S APPROACH TO DEEPFAKES

- Existing Laws: In India, existing laws such as Sections 67 and 67A of the Information Technology Act (2000) address aspects of deepfakes related to defamation and explicit material dissemination. Section 67 criminalizes the transmission of obscene material, which could apply to deepfake content that is sexually explicit. Section 67A specifically targets sexually explicit content, potentially covering certain deepfake scenarios.

- Defamation Provision: Section 500 of the Indian Penal Code (1860) deals with defamation and provides legal recourse for individuals harmed by false statements. This provision can be applied to deepfake cases where manipulated content damages an individual’s reputation, offering a path for legal redress.

- Personal Data Protection Bill (2022): The Personal Data Protection Bill aims to safeguard personal data but does not explicitly address deepfakes. While it provides some protection against misuse of personal data, it lacks specific provisions for the challenges posed by deepfake technology.

- Lack of Comprehensive Legal Framework: India currently lacks a comprehensive legal framework specifically targeting deepfakes. The existing laws do not fully address the complex implications of deepfakes for privacy, social stability, national security and democracy. There is a need for targeted regulations to effectively manage and mitigate the risks associated with deepfake technology.

Conclusion

In the digital age, deepfakes are crucial because they combine technological advancement with moral considerations. They reflect the complex connection our society has with truth in the digital era which has an impact on legal frameworks, cultural norms, and individual rights. Deepfake problems necessitate a coordinated approach from tech firms, policymakers and educational institutions. putting an emphasis on public awareness, fair policies, and the incorporation of ethical AI. Everyone is subject to the duty, which emphasizes the need of critical thinking and well-informed decision-making in the information-rich world of today. Our collaborative efforts are essential to building a digital environment based on integrity and trust.

References

[1] Seng, L. K., Mamat, N., Abas, H., & Ali, W. N. H. W. (2024). AI Integrity Solutions for Deepfake Identification and Prevention. Open International Journal of Informatics, 12(1), 35-46. [2] Shirish, A., & Komal, S. (2024). A Socio-Legal Inquiry on Deepfakes. California Western International Law Journal, 54(2), 6. [3] Asghar, J., & Aslam, M. U. Vigilance in the Age of AI: Safeguarding Against Emerging Cyber Risks. [4] Gambín, Á. F., Yazidi, A., Vasilakos, A., Haugerud, H., & Djenouri, Y. (2024). Deepfakes: current and future trends. Artificial Intelligence Review, 57(3), 64. [5] Montasari, R. (2024). Introduction: Cyberspace, Cyberterrorism and the International Security in the Fourth Industrial Revolution: Threats, Assessment and Responses. In Cyberspace, Cyberterrorism and the International Security in the Fourth Industrial Revolution: Threats, Assessment and Responses (pp. 1-15). Cham: Springer International Publishing. [6] Al-kfairy, M., Mustafa, D., Kshetri, N., Insiew, M., & Alfandi, O. (2024, August). Ethical Challenges and Solutions of Generative AI: An Interdisciplinary Perspective. In Informatics (Vol. 11, No. 3, p. 58). MDPI. [7] Familoni, B. T. (2024). Cybersecurity challenges in the age of AI: theoretical approaches and practical solutions. Computer Science & IT Research Journal, 5(3), 703-724. [8] Mingo, H. C. (2024). The Emerging Cybersecurity Challenges With Artificial Intelligence. In Multisector Insights in Healthcare, Social Sciences, Society, and Technology (pp. 163-185). IGI Global. [9] Domenteanu, A., T?taru, G. C., Cr?ciun, L., Mol?nescu, A. G., Cotfas, L. A., & Delcea, C. (2024). Living in the Age of Deepfakes: A Bibliometric Exploration of Trends, Challenges, and Detection Approaches. Information, 15(9), 525. [10] Schreiber, A., & Schreiber, I. (2024). Bridging knowledge gap: the contribution of employees’ awareness of AI cyber risks comprehensive program to reducing emerging AI digital threats. Information & Computer Security. [11] Tremont, T. M. (2023). Human-AI: Using Threat Intelligence to Expose Deepfakes and the Exploitation of Psychology (Doctoral dissertation, Capitol Technology University). [12] Mustak, M., Salminen, J., Mäntymäki, M., Rahman, A., & Dwivedi, Y. K. (2023). Deepfakes: Deceptions, mitigations, and opportunities. Journal of Business Research, 154, 113368. [13] Leone, M. (2023). The spiral of digital falsehood in deepfakes. International Journal for the Semiotics of Law-Revue internationale de Sémiotique juridique, 36(2), 385-405. [14] Treleaven, P., Barnett, J., Brown, D., Bud, A., Fenoglio, E., Kerrigan, C., ... & Schoernig, M. (2023). The future of cybercrime: AI and emerging technologies are creating a cybercrime tsunami. SSRN. [15] Williamson, S. M., & Prybutok, V. (2024). The Era of Artificial Intelligence Deception: Unraveling the Complexities of False Realities and Emerging Threats of Misinformation. Information, 15(6), 299. [16] Wach, K., Duong, C. D., Ejdys, J., Kazlauskait?, R., Korzynski, P., Mazurek, G., ... & Ziemba, E. (2023). The dark side of generative artificial intelligence: A critical analysis of controversies and risks of ChatGPT. Entrepreneurial Business and Economics Review, 11(2), 7-30. [17] Akhtar, Z., Pendyala, T. L., & Athmakuri, V. S. (2024). Video and Audio Deepfake Datasets and Open Issues in Deepfake Technology: Being Ahead of the Curve. Forensic Sciences, 4(3), 289-377. [18] ?abuz, M. (2023). Regulating deep fakes in the artificial intelligence act. Applied Cybersecurity & Internet Governance, 2(1), 1-42. [19] Vaseashta, A. (2023). Existential Risks Associated with Dual-Use Technologies,\". Intersections, Reinforcements, Cascades, 156-170. [20] Qureshi, S. M., Saeed, A., Almotiri, S. H., Ahmad, F., & Al Ghamdi, M. A. (2024). Deepfake forensics: a survey of digital forensic methods for multimodal deepfake identification on social media. PeerJ Computer Science, 10, e2037. [21] Qureshi, S. M., Saeed, A., Almotiri, S. H., Ahmad, F., & Al Ghamdi, M. A. (2024). Deepfake forensics: a survey of digital forensic methods for multimodal deepfake identification on social media. PeerJ Computer Science, 10, e2037. [22] George, A. S. (2023). Regulating Deepfakes to Protect Indian Elections. Partners Universal Innovative Research Publication, 1(2), 75-92. [23] Al-kfairy, M., Mustafa, D., Kshetri, N., Insiew, M., & Alfandi, O. A Systematic Review and Analysis of Ethical Challenges of Generative Ai: An Interdisciplinary Perspective. Available at SSRN 4833030. [24] Yigit, Y., Buchanan, W. J., Tehrani, M. G., & Maglaras, L. (2024). Review of generative ai methods in cybersecurity. arXiv preprint arXiv:2403.08701. [25] Bakir, V., Laffer, A., McStay, A., Miranda, D., & Urquhart, L. (2024). On Manipulation by Emotional AI: UK Adults\' Views and Governance Implications. Frontiers in Sociology, 9, 1339834. [26] Sison, A. J. G., Daza, M. T., Gozalo-Brizuela, R., & Garrido-Merchán, E. C. (2023). ChatGPT: more than a “weapon of mass deception” ethical challenges and responses from the human-centered artificial intelligence (HCAI) perspective. International Journal of Human–Computer Interaction, 1-20. [27] Almeida, F. (2023). Prospects of cybersecurity in smart cities. Future Internet, 15(9), 285. [28] Lindsay, G., Brown, J. C., Johnson, B. D., Owens, C., Hall, A., & Carrott, J. H. (2023). Microtargeting Unmasked: Safeguarding Law Enforcement, the Military, and the Nation in the Era of Personalized Threats. [29] Naitali, A., Ridouani, M., Salahdine, F., & Kaabouch, N. (2023). Deepfake attacks: Generation, detection, datasets, challenges, and research directions. Computers, 12(10), 216. [30] Mateo, E. (2023). A Deep Dive into Artificial Intelligence and Its Integration into Cybersecurity (Master\'s thesis, Utica University).

Copyright

Copyright © 2024 Kaif Qureshi, Akshat Verma, Harsh Vachheta, Anant Singh, Zaid Khan Pathan. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Download Paper

Paper Id : IJRASET64202

Publish Date : 2024-09-10

ISSN : 2321-9653

Publisher Name : IJRASET

DOI Link : Click Here

Submit Paper Online

Submit Paper Online